Database performance is very important for achieving maximal ergonomics of the website. Everyone knows that one of the most important usability condition is the response time. Everyone knows also that the database may be the bottleneck. In the worst case it may even cause inaccessibility of the website.

Let’s have a closer look at this problem.

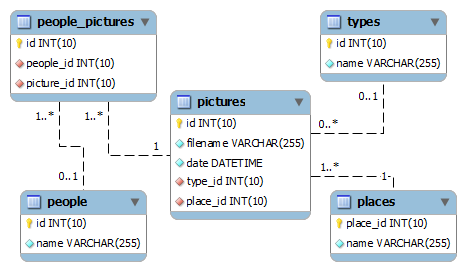

I used again my sample The Lost database but with greater number of records (the table pictures has now 1 000 000 records and the table people_pictures has 3 000 000 records).

The size of the database is now 118,3 Mb.

Here you can find a simple PHP script to generate such number of records.

Imagine that now we are about to create a simple website for this collection. The typical queries we could use are as follow:

Query 1: Show all types and the number of pictures of each type. (We want to use this for creating a side menu where the type’s names with the links and the number of pictures will be given in brackets for every type.)

select types.name, count(pictures.id) from types, pictures where pictures.type_id=types.id group by types.name;

Query 2: Show all characters with the number of pictures in which they are tagged. (This query can be also used for another type of side menu.)

select people.name, count(people_pictures.id) from people join people_pictures on (people.id=people_pictures.people_id) group by people.name;

Query 3: Show 20 newest pictures (the creation date counts) with all characters tagged in every picture (it can be the main element on the website).

select filename, group_concat(name) from people_pictures join pictures on (people_pictures.picture_id=pictures.id) join people on (people_pictures.people_id=people.id) group by filename order by date limit 1,20;

These queries are very simple but they perform very bad. (On my computer: query1 – 22s, query2 – 84s and query3 reaches the time-out – 240s).

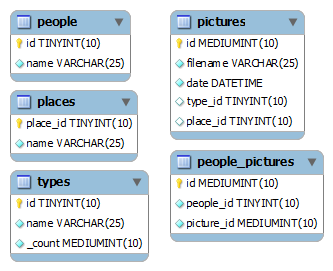

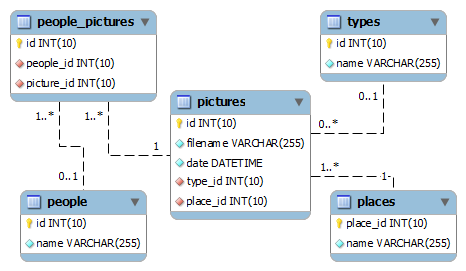

Design makes difference

At the beginning let’s have a look at the types we use. I purposely created the first version of database with the “default” types and sizes. Most of them we can substitute with something less heavy. For example: for the tables types, places and people the id column will rather not have more than 100 rows, so we can use TINYINT instead of INT. We can also limit all the columns name to 25 characters. All changes in the pictures below:

This way we already earn some time for first two queries. Query1 takes now 2s and Query2 26s. Query 3 still reaches the time limit.

More information about MySQL data types is here.

Indexes

Indexes should be created carefully. In most cases, added correctly, may dramatically speed up the queries. Generally speaking we should create indexes on these columns that we use for JOINs and clauses WHERE, ORDER BY and GROUP BY.

Query 3 joins tables people_pictures with people and pictures (relation many-to-many). It seems reasonable to create indexes on columns people_id and picture_id in people_pictures table. The result set is grouped by pictures.filename and ordered by pictures.date. We can create more two indexes on these columns. Such modification decrease the execution time to 196 s.

In some cases, indexes may provoke increase of execution time. Let’s have a look at Query 1. It uses column pictures.type_id to join pictures with their types. However if we create index on pictures.type_id we notice that the execution time for this query increases to 13s .

Using indexes for joining dictionary-like tables produces overhead. The index may take more space that the actual table data. In such cases it is faster to read sequentially from table, because this minimizes disk seeks (especially when the dictionary table is short).

For Query 1 we can just delete the index from pictures.type_id. But what happened when we use the same index in few queries and it increase the execution time only for some of them? Fortunately there is an easy solution. We can modify the query by adding ignore index hint.

The final results of this optimization phase are Query1: 2s, Query 2: 20s and Query3: 196s - still too much.

This is what we can achieve optimizing on spec. The better way is to look closer at every particular query.

Some useful links:

Explain and other techniques.

One of the most powerful tool for optimizing is EXPLAIN statement. It helps to analyze particular queries by showing the their execution plan.

Query 1

Let’s have a look at the EXPLAIN output for this query.

|

id |

select_type |

table |

type |

possible_keys |

key |

key_len |

ref |

rows |

Extra |

|

1 |

SIMPLE |

pictures |

ALL |

NULL |

NULL |

NULL |

NULL |

1000000 |

Using temporary; Using filesort |

|

1 |

SIMPLE |

types |

ALL |

PRIMARY |

NULL |

NULL |

NULL |

4 |

Using where; Using join buffer |

From the query execution plan we can see that unnecessary file sort is used. This is caused by GROUP BY clause. If you use GROUP BY, output rows are sorted according to the GROUP BY columns as if you had an ORDER BY for the same columns. To avoid the overhead of sorting that GROUP BY produces, add ORDER BY NULL.

Most of the execution time is taken to process the pictures table, while the one that is more interesting for this query is the types table.

To overcome this we can provide an extra column to the types tables – types._count. Every time a picture is added, deleted or updated this column will be updated as well for a proper picture type. This way it will store the current number of pictures for every type.

To keep the data coherent we can use procedures and triggers that works inside the database and are not depended on any external interface.

We need two procedures – one to increase the counter of 1 another to decrease it of 1.

DELIMITER $$ CREATE DEFINER=`root`@`localhost` PROCEDURE `inc_pictures_type_counter`(IN type_id INT) BEGIN UPDATE types SET _count=_count+1 WHERE id=type_id; END

DELIMITER $$ CREATE DEFINER=`root`@`localhost` PROCEDURE `dec_pictures_type_counter`(IN type_id INT) BEGIN UPDATE types SET _count=_count-1 WHERE id=type_id; END

Now triggers. When we add a new picture of certain type the database has to call the procedure increasing the counter for that type - inc_pictures_type_counter.

DROP TRIGGER IF EXISTS lost.on_insert_picture_type CREATE TRIGGER lost.on_insert_picture_type AFTER INSERT ON lost.pictures FOR EACH ROW BEGIN CALL inc_pictures_type_counter(NEW.type_id); END

When we delete a picture the database has to call the procedure decreasing the counter - dec_pictures_type_counter.

DROP TRIGGER IF EXISTS lost.on_delete_picture_type CREATE TRIGGER lost.on_delete_picture_type AFTER DELETE ON lost.pictures FOR EACH ROW BEGIN CALL dec_pictures_type_counter(OLD.type_id); END

When the type of a picture is updated the database has to call the dec_pictures_type_counter for the old type and then inc_pictures_type_counter for the new type.

DROP TRIGGER IF EXISTS lost.on_update_picture_type CREATE TRIGGER lost.on_update_picture_type AFTER UPDATE ON lost.pictures FOR EACH ROW BEGIN CALL dec_pictures_type_counter(OLD.type_id); CALL inc_pictures_type_counter(NEW.type_id); END

The new version of Query 1 uses only one table:

select name, _count from types;

and take less than 0,0001 s.

Query 2

The execution plan for this query:

|

id |

select_type |

table |

type |

possible_keys |

key |

key_len |

ref |

rows |

Extra |

|

1 |

SIMPLE |

people |

ALL |

PRIMARY |

NULL |

NULL |

NULL |

12 |

Using temporary; Using filesort |

|

1 |

SIMPLE |

people_pictures |

ref |

people_id |

people_id |

1 |

lost.people.id |

250000 |

Here we can see that the database first reads all people.id and then the table people_pictures is searched for rows where people_pictures.people_id is equal to current people.id. It means that the huge people_pictures table is searches 12 times and every time approximately 250000 rows are processes.

Of course we sense that it would be faster to process the huge table once and join it with people table when necessary. Let’s try to create new version of Query 2 that works this way:

select name, count(people_id) from people_pictures left join people on (people_pictures.people_id=people.id) group by people_id;

This query takes 1,5s and the query execution plan is now as follow:

|

id |

select_type |

table |

type |

possible_keys |

key |

key_len |

ref |

rows |

Extra |

|

1 |

SIMPLE |

people_pictures |

index |

NULL |

people_id |

1 |

NULL |

3000000 |

Using index |

|

1 |

SIMPLE |

people |

eq_ref |

PRIMARY |

PRIMARY |

1 |

lost.people_pictures.people_id |

1 |

The people_pictures table is processed one time. Anyway the execution time is a bit too long. For this query we can use SQL_CACHE. The result set of this query will be cached and recomputed only in case, when any of involved tables changed. (If the caching mode is set to query_cache_type = 2 then you can use the SQL_CACHE hint to tell MySQL which queries to cache.) With the SQL_CACHE hint the execution time is minimized to less than 0,0001 s.

Query 3

Query 3 is the most complex and takes much more time then the others. Let’s see its execution plan:

|

id |

select_type |

table |

type |

possible_keys |

key |

key_len |

ref |

rows |

Extra |

|

1 |

SIMPLE |

people |

ALL |

PRIMARY |

NULL |

NULL |

NULL |

12 |

Using temporary; Using filesort |

|

1 |

SIMPLE |

people_pictures |

ref |

picture_id,people_id |

people_id |

1 |

lost.people.id |

250000 |

|

|

1 |

SIMPLE |

pictures |

eq_ref |

PRIMARY |

PRIMARY |

3 |

lost.people_pictures.picture_id |

1 |

The first used table is people. The optimizer reads people_pictures table for every people.id – 12 times. Only then the people_pictures is joined with pictures.

Since we want to limit the result set to pictures that fulfill certain condition it seems more reasonable to use the table pictures as first. Then we can join only these rows that left with the other two tables. The new version of Query 3 may be like this:

select filename, group_concat(people_id) from pictures left join people_pictures on (people_pictures.picture_id=pictures.id) left join people on (people_pictures.people_id=people.id) group by filename order by date limit 1,20;The time of this query is 42,5 s.

Not bad, but also not good enough. The execution plan:

|

id |

select_type |

table |

type |

possible_keys |

key |

key_len |

ref |

rows |

Extra |

|

1 |

SIMPLE |

pictures |

index |

NULL |

date |

8 |

NULL |

7 |

Using temporary |

|

1 |

SIMPLE |

people_pictures |

ref |

picture_id |

picture_id |

3 |

lost.pictures.id |

3 |

|

|

1 |

SIMPLE |

people |

eq_ref |

PRIMARY |

PRIMARY |

1 |

lost.people_pictures.people_id |

1 |

Using index |

If we think more about it, we may notice, that it maybe better to choose only newest 20 pictures from people_pictures and join the people table with it. This way we could replace one conjunction with subquery. It would be something like this:

select picture_id, group_concat(name) from people_pictures join people on (people_pictures.people_id=people.id) where picture_id in (select id from pictures order by date desc limit 1, 20) group by picture_id;

“Would be” - because MySQL doesn’t allow LIMIT statement in subqueries.

The thing we can do is to split these to two queries and join them in e.g. PHP like this:

$db=new PDO('mysql:host=localhost;dbname=lost-basic', 'root', '', array(PDO::ATTR_PERSISTENT => false, PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION));

$query = 'select id from pictures order by date desc limit 1, 20;';

$res=$db->query($query);

$pictures_array=array();

foreach($res as $row){

foreach($row as $value){

$pictures_array[]=$value;

}

}

$pictures = implode(', ', $pictures_array);

$query = "select picture_id, group_concat(name) from people_pictures join people on (people_pictures.people_id=people.id) where picture_id in ($pictures) group by picture_id";

$res=$db->query($query);

$res->setFetchMode(PDO::FETCH_NUM);

$res->fetchAll();

$db=null;

This works really fine and takes only 0,003 s.

Summary

As we can see there are many factors that influence the performance of the database but also many solutions for improving the execution time. We manage to decrease time for all queries to less than 0,01 s.

These above ideas are just few from many others. It is very important is to observe the slow queries log. Such queries maybe then analyzed using EXPLAIN statement and then best solution for particular query can be used.

Some other useful information here:

- http://dev.mysql.com/doc/refman/5.5/en/optimization.html

- http://dev.mysql.com/doc/refman/5.0/en/handler.html

- http://www.petefreitag.com/item/613.cfm

- http://forge.mysql.com/wiki/MySQL_Internals_Algorithms